Using the Molecule delegated driver with Ansible

Published on:

For some time now, I have been working with the Outscale cloud platform, whose API is close to AWS's. Among other things, this means the AWS CLI can be used against it. The catch: I need to develop Ansible roles on this platform, and the Molecule EC2 driver does not work with Outscale, because the keys in the API responses are not the same as AWS's. Fortunately, Outscale provides a Terraform provider. But how do you use it with Molecule?

The Molecule delegated driver

Unfortunately, the documentation for Molecule's delegated driver is only a few lines long. This shows how important it is to document your work properly.

So I rolled up my sleeves and searched GitHub, without success. None of the code I found handled the complete Molecule lifecycle, from create to destroy…

I decided to run the following command to see what the delegated driver generates by default:

```shell
molecule init role --driver-name delegated --verifier-name ansible steph.test_role

INFO Initializing new role test_role...
No config file found; using defaults
- Role test_role was created successfully
[WARNING]: No inventory was parsed, only implicit localhost is available
localhost | CHANGED => {
    "backup": "",
    "changed": true,
    "msg": "line added"
}
```

Let's look at what was generated:

```shell
cd test_role/molecule/default
ll -l
total 24
-rw-rw-r-- 1 vagrant vagrant  171 mars 6 13:29 converge.yml
-rw-rw-r-- 1 vagrant vagrant 1114 mars 6 13:29 create.yml
-rw-rw-r-- 1 vagrant vagrant  599 mars 6 13:29 destroy.yml
-rw-rw-r-- 1 vagrant vagrant  336 mars 6 13:29 INSTALL.rst
-rw-rw-r-- 1 vagrant vagrant  142 mars 6 13:29 molecule.yml
-rw-rw-r-- 1 vagrant vagrant  193 mars 6 13:29 verify.yml
```

It was when I opened create.yml that it clicked.

```yaml
---
- name: Create
  hosts: localhost
  connection: local
  gather_facts: false
  no_log: "{{ molecule_no_log }}"
  tasks:

    # TODO: Developer must implement and populate 'server' variable

    - when: server.changed | default(false) | bool
      block:
        - name: Populate instance config dict
          ansible.builtin.set_fact:
            instance_conf_dict: {
              'instance': "{{ }}",
              'address': "{{ }}",
              'user': "{{ }}",
              'port': "{{ }}",
              'identity_file': "{{ }}", }
          with_items: "{{ server.results }}"
          register: instance_config_dict

        - name: Convert instance config dict to a list
          ansible.builtin.set_fact:
            instance_conf: "{{ instance_config_dict.results | map(attribute='ansible_facts.instance_conf_dict') | list }}"

        - name: Dump instance config
          ansible.builtin.copy:
            content: |
              # Molecule managed

              {{ instance_conf | to_json | from_json | to_yaml }}
            dest: "{{ molecule_instance_config }}"
            mode: 0600
```

By running molecule --debug create, I understood that the instance_config.yml file stores the information about the instances. But what should go in it? The minimum data is given by the structure below, taken from create.yml:

```yaml
{
  'instance': "{{ }}",
  'address': "{{ }}",
  'user': "{{ }}",
  'port': "{{ }}",
  'identity_file': "{{ }}",
}
```

Looking at these fields, you can see they hold everything needed to run an Ansible playbook against a host.

Starting from this observation, I built a Terraform template, rendered by Ansible, whose outputs return this information (perhaps more than strictly needed).
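To make this contract concrete, here is a minimal Python sketch that checks instance_config entries for the keys listed in the generated create.yml. The helper names are hypothetical and are not part of Molecule. The key list comes only from the template above, and Molecule may accept or use other keys.

```python
"""Check instance_config entries against the keys listed in the delegated create.yml template."""

# Keys from the structure generated by `molecule init` for the delegated driver.
REQUIRED_KEYS = ("instance", "address", "user", "port", "identity_file")


def missing_keys(entry: dict) -> list[str]:
    """Return the required keys that are absent or empty in one instance entry (hypothetical helper)."""
    return [key for key in REQUIRED_KEYS if entry.get(key) in (None, "")]


def check_instance_config(entries: list[dict]) -> dict[str, list[str]]:
    """Map each instance name to its missing keys; an empty dict means every entry is complete."""
    problems = {}
    for index, entry in enumerate(entries):
        missing = missing_keys(entry)
        if missing:
            name = entry.get("instance") or f"<entry {index}>"
            problems[name] = missing
    return problems


if __name__ == "__main__":
    sample = [
        {"instance": "Ubuntu", "address": "203.0.113.10", "user": "outscale",
         "port": 22, "identity_file": "/tmp/id_ed25519"},
        {"instance": "Rocky", "address": "203.0.113.11", "user": "outscale", "port": 22},
    ]
    print(check_instance_config(sample))  # {'Rocky': ['identity_file']}
```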

Outscale Terraform template

For now, I am keeping things as simple as possible. Later I will add options such as:

- adding security group rules
- adding tags other than name
- restricting connections to my own IP
- …

The code:

```hcl
# Jinja placeholders are filled in by ansible.builtin.template from the merged platform variables.
terraform {
  required_providers {
    outscale = {
      source  = "outscale/outscale"
      version = "{{ platform.provider_version }}"
    }
  }
}

provider "outscale" {
  region        = "{{ platform.region }}"
  access_key_id = "{{ platform.accessid }}"
  secret_key_id = "{{ platform.secretid }}"
}

variable "vm_type" {
  type    = string
  default = "{{ platform.instance_type }}"
}

variable "run_name" {
  type    = string
  default = "{{ run_id }}"
}

variable "region" {
  type    = string
  default = "{{ platform.region }}"
}

variable "image_name" {
  type    = string
  default = "{{ platform.image_name }}"
}

variable "instance_name" {
  type    = string
  default = "{{ platform.name }}"
}

variable "ssh_user" {
  type    = string
  default = "{{ platform.ssh_user }}"
}

variable "ssh_port" {
  type    = number
  default = "{{ platform.ssh_port }}"
}

variable "authorized_range_ip" {
  type    = list(string)
  default = {{ platform.authorized_range_ip | to_json }}
}

variable "private_key_path" {
  type    = string
  default = "{{ platform.private_key_path }}"
}

variable "public_key_path" {
  type    = string
  default = "{{ platform.public_key_path }}"
}

data "outscale_image" "image" {
  filter {
    name   = "image_names"
    values = [var.image_name]
  }
}

resource "outscale_keypair" "ssh-keypair" {
  keypair_name = format("molecule-%s-%s", var.instance_name, var.run_name)
  public_key   = file(var.public_key_path)
}

resource "outscale_security_group" "security_group_molecule" {
  description         = "Molecule"
  security_group_name = format("molecule-%s-%s", var.instance_name, var.run_name)
}

# Allow inbound SSH from the authorized ranges only.
resource "outscale_security_group_rule" "security_group_molecule_ssh" {
  flow              = "Inbound"
  security_group_id = outscale_security_group.security_group_molecule.security_group_id
  rules {
    from_port_range = var.ssh_port
    to_port_range   = var.ssh_port
    ip_protocol     = "tcp"
    ip_ranges       = var.authorized_range_ip
  }
}

resource "outscale_vm" "my_vm" {
  image_id           = data.outscale_image.image.id
  vm_type            = var.vm_type
  keypair_name       = outscale_keypair.ssh-keypair.keypair_name
  security_group_ids = [outscale_security_group.security_group_molecule.security_group_id]
  tags {
    key   = "name"
    value = format("molecule-%s-%s", var.instance_name, var.run_name)
  }
}

resource "outscale_public_ip" "my_public_ip" {
  tags {
    key   = "name"
    value = format("molecule-%s-%s", var.instance_name, var.run_name)
  }
}

resource "outscale_public_ip_link" "my_public_ip_link" {
  vm_id     = outscale_vm.my_vm.vm_id
  public_ip = outscale_public_ip.my_public_ip.public_ip
}

# Outputs consumed when building instance_config.yml.
output "my_public_ip" {
  value = outscale_public_ip.my_public_ip.public_ip
}

output "mv_vm" {
  value = outscale_vm.my_vm.tags
}

output "instance_name" {
  value = var.instance_name
}

output "ssh_user" {
  value = var.ssh_user
}

output "identity_file" {
  value = var.private_key_path
}

output "ssh_port" {
  value = var.ssh_port
}

output "workspace" {
  value = var.run_name
}
```

I saved this template in the molecule/default/templates/ directory as main.tf.j2.
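The template exposes every connection detail as an output, so an instance entry could in principle be built from the outputs alone. The following is a minimal Python sketch of that mapping. It assumes the outputs dictionary has the shape printed by `terraform output -json`, where each output is wrapped as `{"sensitive": ..., "type": ..., "value": ...}`. This is also the shape the community.general.terraform module returns under `outputs`. The function name and the mapping table are hypothetical.

```python
"""Build a Molecule instance_config entry from the outputs of the Outscale template."""
import json

# Template output name -> instance_config key (output names come from main.tf.j2).
OUTPUT_TO_KEY = {
    "instance_name": "instance",
    "my_public_ip": "address",
    "ssh_user": "user",
    "ssh_port": "port",
    "identity_file": "identity_file",
    "workspace": "workspace",
}


def outputs_to_instance(outputs: dict) -> dict:
    """Unwrap each output's "value" field and rename it to the key Molecule expects."""
    entry = {}
    for output_name, key in OUTPUT_TO_KEY.items():
        if output_name not in outputs:
            raise KeyError(f"Terraform output {output_name!r} is missing")
        entry[key] = outputs[output_name]["value"]
    entry["port"] = int(entry["port"])  # keep the SSH port numeric
    return entry


if __name__ == "__main__":
    # Shape printed by `terraform output -json`; the values here are made up.
    raw = json.loads("""{
      "instance_name": {"sensitive": false, "type": "string", "value": "Ubuntu"},
      "my_public_ip":  {"sensitive": false, "type": "string", "value": "203.0.113.10"},
      "ssh_user":      {"sensitive": false, "type": "string", "value": "outscale"},
      "ssh_port":      {"sensitive": false, "type": "number", "value": 22},
      "identity_file": {"sensitive": false, "type": "string", "value": "/tmp/id_ed25519"},
      "workspace":     {"sensitive": false, "type": "string", "value": "osuyx"}
    }""")
    print(outputs_to_instance(raw))
```

The mv_vm output is simply ignored here, because Molecule does not need the VM tags to connect.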

As a reminder, you can define several platforms in the Molecule configuration file:

```yaml
---
dependency:
  name: galaxy
driver:
  name: delegated
platforms:
  - name: Ubuntu
    image_name: "Ubuntu-22.04-2022.12.06-0"
    image_owner: ""
  - name: Rocky
    image_name: "RockyLinux-9-2022.12.06-0"
provisioner:
  name: ansible
verifier:
  name: ansible
```

So I modified create.yml so that it can create several instances. Here is the result:

```yaml
---
- name: Create
  hosts: localhost
  connection: local
  gather_facts: false
  no_log: "{{ molecule_no_log }}"
  vars:
    default_assign_public_ip: true
    default_accessid: "{{ lookup('env', 'OUTSCALE_ACCESSKEYID') }}"
    default_secretid: "{{ lookup('env', 'OUTSCALE_SECRETKEYID') }}"
    default_instance_type: t2.small
    default_private_key_path: "{{ lookup('env', 'MOLECULE_EPHEMERAL_DIRECTORY') }}/id_ed25519"
    default_public_key_path: "{{ default_private_key_path }}.pub"
    default_ssh_user: outscale
    default_region: "eu-west-2"
    default_ssh_port: 22
    default_user_data: ''
    default_provider_version: ">= 0.8.2"
    default_authorized_range_ip: ["0.0.0.0/0"]

    platform_defaults:
      authorized_range_ip: "{{ default_authorized_range_ip }}"
      provider_version: "{{ default_provider_version }}"
      assign_public_ip: "{{ default_assign_public_ip }}"
      accessid: "{{ default_accessid }}"
      secretid: "{{ default_secretid }}"
      instance_type: "{{ default_instance_type }}"
      private_key_path: "{{ default_private_key_path }}"
      public_key_path: "{{ default_public_key_path }}"
      ssh_user: "{{ default_ssh_user }}"
      ssh_port: "{{ default_ssh_port }}"
      image_name: ""
      name: ""
      region: "{{ default_region }}"

    # Merging defaults into a list of dicts is, it turns out, not straightforward
    platforms: >-
      {{ [platform_defaults | dict2items]
           | product(molecule_yml.platforms | map('dict2items') | list)
           | map('flatten', levels=1)
           | list
           | map('items2dict')
           | list }}

  tasks:
    - name: Create
      ansible.builtin.include_tasks: terraform-create.yml
      loop: '{{ platforms }}'
      loop_control:
        loop_var: platform

- name: Test provisionned vm connection
  hosts: localhost
  connection: local
  gather_facts: false
  tasks:

    - name: Test SSH Port available
      ansible.builtin.wait_for:
        host: "{{ item }}"
        port: 22
        delay: 30
        timeout: 300
        state: started
      retries: 3
      with_items: "{{ groups['molecule_hosts'] }}"
```

In the first part, I define default values, which are then overridden by the values set in molecule.yml. The first play of this file simply loops over the platforms and, for each one, includes the task file terraform-create.yml. Here is its content:

```yaml
---
- name: Set run_id
  ansible.builtin.set_fact:
    run_id: "{{ lookup('password', '/dev/null chars=ascii_lowercase length=5') }}"

- name: Generate local key pairs
  community.crypto.openssh_keypair:
    path: "{{ platform.private_key_path }}"
    type: ed25519
    size: 2048  # ignored for ed25519 keys, which have a fixed length
    regenerate: never

- name: Create Terraform directory
  ansible.builtin.file:
    state: directory
    path: "{{ lookup('env', 'MOLECULE_EPHEMERAL_DIRECTORY') }}/terraform/{{ run_id }}"
    mode: "0755"

- name: Create Terraform file from template
  ansible.builtin.template:
    src: templates/main.tf.j2
    dest: "{{ lookup('env', 'MOLECULE_EPHEMERAL_DIRECTORY') }}/terraform/{{ run_id }}/main.tf"
    mode: "0664"

- name: Provision VM {{ platform.name }}
  community.general.terraform:
    project_path: "{{ lookup('env', 'MOLECULE_EPHEMERAL_DIRECTORY') }}/terraform/{{ run_id }}"
    state: present
    force_init: true
  register: state

- name: Create instance config file
  ansible.builtin.file:
    path: "{{ lookup('env', 'MOLECULE_EPHEMERAL_DIRECTORY') }}/instance_config.yml"
    state: touch
    mode: "0644"

- name: Register instance config for VM {{ platform.name }}
  ansible.builtin.blockinfile:
    path: "{{ lookup('env', 'MOLECULE_EPHEMERAL_DIRECTORY') }}/instance_config.yml"
    block: "- { address: {{ state.outputs.my_public_ip.value }} , identity_file: {{ platform.private_key_path }}, instance: {{ platform.name }}, port: {{ platform.ssh_port }}, user: {{ platform.ssh_user }}, workspace: {{ run_id }} }"
    marker: '# {mark} Instance : {{ platform.name }}'
    marker_begin: 'BEGIN'
    marker_end: 'END'

- name: Add to group molecule_hosts {{ platform.name }}
  ansible.builtin.add_host:
    name: "{{ state.outputs.my_public_ip.value }}"
    groups: molecule_hosts
```

I create a directory with a unique name to hold each instance's Terraform files. I drop the rendered template into that directory and run the terraform module. From its outputs, I retrieve the information needed for instance_config.yml, and I use blockinfile to write one block per instance. Finally, I add the VM to a dynamic Ansible group.
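Each platform handed to terraform-create.yml is the result of the defaults-merging expression at the top of create.yml. That Jinja pipeline is hard to read, so here is a Python equivalent of it, step by step. The function name is hypothetical. The steps are:

- dict2items turns each dict into key/value pairs.
- product pairs the defaults with every platform.
- flatten with levels=1 concatenates the two pair lists.
- items2dict rebuilds a dict in which a key from molecule.yml, coming later, overrides the default.

```python
"""Python equivalent of the create.yml defaults-merging Jinja expression."""
from itertools import product


def merge_platforms(platform_defaults: dict, platforms: list[dict]) -> list[dict]:
    """Merge defaults into each platform; platform keys win, as with items2dict."""
    default_items = list(platform_defaults.items())           # dict2items on the defaults
    platform_items = [list(p.items()) for p in platforms]     # map('dict2items')
    merged = []
    for defaults, own in product([default_items], platform_items):  # product(...)
        pairs = defaults + own                                # map('flatten', levels=1)
        merged.append(dict(pairs))                            # items2dict: last key wins
    return merged


if __name__ == "__main__":
    defaults = {"ssh_user": "outscale", "ssh_port": 22, "image_name": "", "name": ""}
    molecule_platforms = [
        {"name": "Ubuntu", "image_name": "Ubuntu-22.04-2022.12.06-0"},
        # Hypothetical ssh_user override, only to show precedence.
        {"name": "Rocky", "image_name": "RockyLinux-9-2022.12.06-0", "ssh_user": "rocky"},
    ]
    for platform in merge_platforms(defaults, molecule_platforms):
        print(platform)
```

In plain Python, each merged entry is simply `{**platform_defaults, **platform}`. The Jinja version takes the detour through lists of items to get the same "molecule.yml wins" precedence in a single expression.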

After the loop, a second play waits until port 22 is actually open on each VM.
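That second play relies on ansible.builtin.wait_for with `state: started`, which essentially retries a TCP connection until it succeeds or a timeout expires. Below is a minimal Python sketch of the same idea using only the standard library. The function name and the polling interval are assumptions, and the way delay and timeout combine is not meant to match wait_for exactly.

```python
"""Poll a TCP port until it accepts connections, in the spirit of wait_for with state: started."""
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 300.0,
                  delay: float = 0.0, interval: float = 1.0) -> bool:
    """Return True once host:port accepts a TCP connection, False if timeout expires first."""
    time.sleep(delay)  # initial pause before the first attempt
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError while nothing listens on the port
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # Open a throwaway local listener so the example is self-contained.
    with socket.socket() as server:
        server.bind(("127.0.0.1", 0))
        server.listen()
        port = server.getsockname()[1]
        print(wait_for_port("127.0.0.1", port, timeout=5))
```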

Testing the molecule login and converge commands

Let's provision two machines (be patient, it takes about 2 minutes):

```shell
molecule list
INFO Running default > list
INFO Running docker > list
INFO Running ec2 > list
                ╷             ╷                  ╷               ╷         ╷
  Instance Name │ Driver Name │ Provisioner Name │ Scenario Name │ Created │ Converged
╶───────────────┼─────────────┼──────────────────┼───────────────┼─────────┼───────────╴
  Ubuntu        │ delegated   │ ansible          │ default       │ false   │ false
  Rocky         │ delegated   │ ansible          │ default       │ false   │ false
```

```shell
molecule create
TASK [Create] ******************************************************************
included: /home/vagrant/Projets/personal/ansible/roles/pyenv/molecule/default/terraform-create.yml for localhost => (item=(censored due to no_log))
included: /home/vagrant/Projets/personal/ansible/roles/pyenv/molecule/default/terraform-create.yml for localhost => (item=(censored due to no_log))

TASK [Set run_id] **************************************************************
ok: [localhost]

TASK [Generate local key pairs] ************************************************
changed: [localhost]

TASK [Create Terraform directory] **********************************************
changed: [localhost]

TASK [Create Terraform file from template] *************************************
changed: [localhost]

TASK [Provision VM Ubuntu] *****************************************************
changed: [localhost]

TASK [Create instance config file] *********************************************
changed: [localhost]

TASK [Register instance config for VM Ubuntu] **********************************
changed: [localhost]

TASK [Add to group molecule_hosts Ubuntu] **************************************
changed: [localhost]

TASK [Set run_id] **************************************************************
ok: [localhost]

TASK [Generate local key pairs] ************************************************
ok: [localhost]

TASK [Create Terraform directory] **********************************************
changed: [localhost]

TASK [Create Terraform file from template] *************************************
changed: [localhost]

TASK [Provision VM Rocky] ******************************************************
changed: [localhost]

TASK [Create instance config file] *********************************************
changed: [localhost]

TASK [Register instance config for VM Rocky] ***********************************
changed: [localhost]

TASK [Add to group molecule_hosts Rocky] ***************************************
changed: [localhost]

PLAY [Test provisionned vm connection] *****************************************

TASK [Test SSH Port available] *************************************************
ok: [localhost] => (item=142.44.35.234)
ok: [localhost] => (item=142.44.41.97)

PLAY RECAP *********************************************************************
localhost : ok=19 changed=13 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

INFO Running default > prepare
WARNING Skipping, prepare playbook not configured.
```

A quick look at the /home/vagrant/.cache/molecule/pyenv/default/instance_config.yml file:

```yaml
# BEGIN Instance : Ubuntu
- { address: 142.44.35.234 , identity_file: /home/vagrant/.cache/molecule/pyenv/default/id_ed25519, instance: Ubuntu, port: 22, user: outscale, workspace: osuyx }
# END Instance : Ubuntu
# BEGIN Instance : Rocky
- { address: 142.44.41.97 , identity_file: /home/vagrant/.cache/molecule/pyenv/default/id_ed25519, instance: Rocky, port: 22, user: outscale, workspace: chzsi }
# END Instance : Rocky
```

Notice that I added just one extra key, workspace, which indicates where the Terraform state lives. We will need it for the destruction.
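The `# BEGIN Instance : …` and `# END Instance : …` lines are the markers written by ansible.builtin.blockinfile. Each platform has its own marker pair, so re-running create rewrites that platform's block instead of appending a duplicate. Here is a simplified Python sketch of that behaviour, using a hypothetical helper that ignores blockinfile options such as insertafter or backup. If the markers exist, the block between them is replaced; otherwise the block is appended at the end of the file, which is blockinfile's default position.

```python
"""Simplified re-implementation of blockinfile's marker-based upsert, for illustration only."""


def upsert_block(text: str, name: str, block: str) -> str:
    """Replace the block between this instance's markers, or append it if the markers are absent."""
    begin = f"# BEGIN Instance : {name}"
    end = f"# END Instance : {name}"
    lines = text.splitlines()
    new_block = [begin, *block.splitlines(), end]
    if begin in lines and end in lines:
        start, stop = lines.index(begin), lines.index(end)
        lines[start:stop + 1] = new_block  # overwrite the existing block in place
    else:
        lines.extend(new_block)            # first run for this instance: append at the end
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    content = ""
    content = upsert_block(content, "Ubuntu", "- { address: 203.0.113.10, workspace: osuyx }")
    content = upsert_block(content, "Rocky", "- { address: 203.0.113.11, workspace: chzsi }")
    # A second run for Ubuntu replaces its block instead of duplicating it.
    content = upsert_block(content, "Ubuntu", "- { address: 203.0.113.12, workspace: abcde }")
    print(content, end="")
```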

Connection test on the Ubuntu machine:

molecule login --host UbuntuINFO Running default > loginWelcome to Ubuntu 22.04.1 LTS (GNU/Linux 5.15.0-56-generic x86_64)

* Documentation: https://help.ubuntu.com * Management: https://landscape.canonical.com * Support: https://ubuntu.com/advantage

System information as of Mon Mar 6 13:10:54 UTC 2023

System load: 0.587890625 Processes: 103 Usage of /: 16.7% of 9.51GB Users logged in: 0 Memory usage: 9% IPv4 address for eth0: 10.9.8.135 Swap usage: 0%

0 updates can be applied immediately.

The list of available updates is more than a week old.To check for new updates run: sudo apt update

The programs included with the Ubuntu system are free software;the exact distribution terms for each program are described in theindividual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted byapplicable law.

-bash: warning: setlocale: LC_ALL: cannot change locale (fr_FR.UTF-8)To run a command as administrator (user "root"), use "sudo <command>".See "man sudo_root" for details.

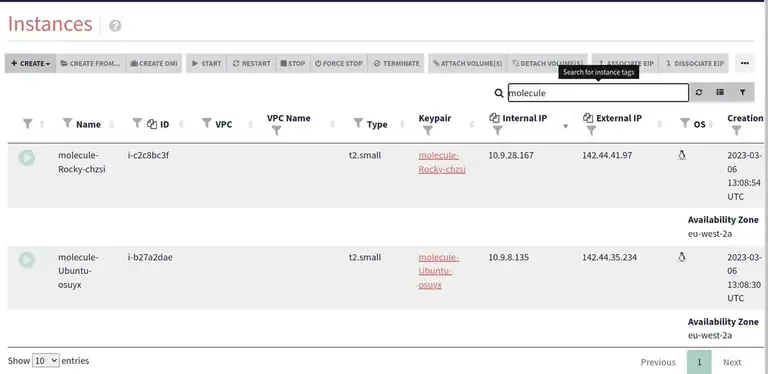

outscale@ip-10-9-8-135:~$Un petit dans le cockpit outscale :

It works. To test converge, I added three tasks to the role that simply install one package:

```yaml
---
- name: Get Facts
  ansible.builtin.setup:
    filter:
      - 'ansible_os_family'

- name: Update cache on Ubuntu
  become: true
  ansible.builtin.apt:
    update_cache: true
    force: false
    autoclean: false
    autoremove: false
    allow_unauthenticated: false
  when: ansible_os_family == "Debian"

- name: Install a package for test
  become: true
  ansible.builtin.package:
    name:
      - python3-pip
    state: present
```

Let's run converge:

```shell
molecule converge

INFO default scenario test matrix: dependency, create, prepare, converge
INFO Performing prerun with role_name_check=0...
INFO Set ANSIBLE_LIBRARY=/home/vagrant/.cache/ansible-compat/4489a2/modules:/home/vagrant/.ansible/plugins/modules:/usr/share/ansible/plugins/modules
INFO Set ANSIBLE_COLLECTIONS_PATH=/home/vagrant/.cache/ansible-compat/4489a2/collections:/home/vagrant/.ansible/collections:/usr/share/ansible/collections
INFO Set ANSIBLE_ROLES_PATH=/home/vagrant/.cache/ansible-compat/4489a2/roles:/home/vagrant/.ansible/roles:/usr/share/ansible/roles:/etc/ansible/roles
INFO Using /home/vagrant/.cache/ansible-compat/4489a2/roles/steph.pyenv symlink to current repository in order to enable Ansible to find the role using its expected full name.
INFO Running default > dependency
WARNING Skipping, missing the requirements file.
WARNING Skipping, missing the requirements file.
INFO Running default > create
WARNING Skipping, instances already created.
INFO Running default > prepare
WARNING Skipping, prepare playbook not configured.
INFO Running default > converge

PLAY [Converge] ****************************************************************

TASK [Include pyenv] ***********************************************************

TASK [pyenv : Get Facts] *******************************************************
ok: [Rocky]
ok: [Ubuntu]

TASK [pyenv : Update cache on Ubuntu] ******************************************
skipping: [Rocky]
changed: [Ubuntu]

TASK [pyenv : Install a package for test] **************************************
changed: [Rocky]
changed: [Ubuntu]

PLAY RECAP *********************************************************************
Rocky : ok=2 changed=1 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0
Ubuntu : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

And how does destruction work?

Destroying the VMs

Destruction follows the same principle, except that I no longer need the template: the instance_config.yml file already stores the folder that contains the Terraform state. Here is destroy.yml:

```yaml
---
- name: Destroy
  hosts: localhost
  connection: local
  gather_facts: false
  no_log: "{{ molecule_no_log }}"
  tasks:
    # Developer must implement.
    # Mandatory configuration for Molecule to function.

    - name: Check instance config file exist
      ansible.builtin.stat:
        path: "{{ molecule_instance_config }}"
      register: file_exist

    - name: Destroy VM
      when: file_exist.stat.exists
      block:
        - name: Load Instance config File
          ansible.builtin.set_fact:
            instance_conf: "{{ lookup('file', molecule_instance_config) | from_yaml }}"

        - name: Destroy
          ansible.builtin.include_tasks: terraform-destroy.yml
          loop: '{{ instance_conf }}'
          loop_control:
            loop_var: platform
```

Same principle as before: we loop over the platforms and include the task file terraform-destroy.yml for each one. Here is its content:

```yaml
---
- name: Destroy VM
  community.general.terraform:
    project_path: "{{ lookup('env', 'MOLECULE_EPHEMERAL_DIRECTORY') }}/terraform/{{ platform.workspace }}"
    state: absent

- name: Destroy Directory
  ansible.builtin.file:
    path: "{{ lookup('env', 'MOLECULE_EPHEMERAL_DIRECTORY') }}/terraform/{{ platform.workspace }}"
    state: absent
```

Nothing too complicated, right?
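To check what this destroy step targets, or to clean up by hand after a failed run, the following Python sketch lists the Terraform directory of each instance along with a manual `terraform destroy` command for it. It makes three assumptions:

- The instance_config entries have already been parsed.
- The ephemeral directory comes from MOLECULE_EPHEMERAL_DIRECTORY, as in the playbooks.
- The command shown is a plain CLI equivalent, not necessarily the exact command line the community.general.terraform module runs.

The function name is hypothetical.

```python
"""List the Terraform directory and a manual destroy command for each Molecule instance."""
import os
import shlex
from pathlib import Path


def destroy_plan(entries: list[dict], ephemeral_dir: str = "") -> list[tuple[str, Path, str]]:
    """Return (instance, terraform directory, shell command) for every instance entry."""
    base = Path(ephemeral_dir or os.environ["MOLECULE_EPHEMERAL_DIRECTORY"])
    plan = []
    for entry in entries:
        project = base / "terraform" / entry["workspace"]  # same path as terraform-destroy.yml
        command = f"terraform -chdir={shlex.quote(str(project))} destroy -auto-approve"
        plan.append((entry["instance"], project, command))
    return plan


if __name__ == "__main__":
    entries = [
        {"instance": "Ubuntu", "workspace": "osuyx"},
        {"instance": "Rocky", "workspace": "chzsi"},
    ]
    for instance, project, command in destroy_plan(entries, "/home/vagrant/.cache/molecule/pyenv/default"):
        print(f"{instance}: {command}")
```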

Let's run the destruction:

```shell
molecule destroy
INFO default scenario test matrix: dependency, cleanup, destroy
INFO Performing prerun with role_name_check=0...
INFO Set ANSIBLE_LIBRARY=/home/vagrant/.cache/ansible-compat/4489a2/modules:/home/vagrant/.ansible/plugins/modules:/usr/share/ansible/plugins/modules
INFO Set ANSIBLE_COLLECTIONS_PATH=/home/vagrant/.cache/ansible-compat/4489a2/collections:/home/vagrant/.ansible/collections:/usr/share/ansible/collections
INFO Set ANSIBLE_ROLES_PATH=/home/vagrant/.cache/ansible-compat/4489a2/roles:/home/vagrant/.ansible/roles:/usr/share/ansible/roles:/etc/ansible/roles
INFO Using /home/vagrant/.cache/ansible-compat/4489a2/roles/steph.pyenv symlink to current repository in order to enable Ansible to find the role using its expected full name.
INFO Running default > dependency
WARNING Skipping, missing the requirements file.
WARNING Skipping, missing the requirements file.
INFO Running default > cleanup
WARNING Skipping, cleanup playbook not configured.
INFO Running default > destroy

PLAY [Destroy] *****************************************************************

TASK [Check instance config file exist] ****************************************
ok: [localhost]

TASK [Load Instance config File] ***********************************************
ok: [localhost]

TASK [Destroy] *****************************************************************
included: /home/vagrant/Projets/personal/ansible/roles/pyenv/molecule/default/terraform-destroy.yml for localhost => (item=(censored due to no_log))
included: /home/vagrant/Projets/personal/ansible/roles/pyenv/molecule/default/terraform-destroy.yml for localhost => (item=(censored due to no_log))

TASK [Destroy VM] **************************************************************
changed: [localhost]

TASK [Destroy Directory] *******************************************************
changed: [localhost]

TASK [Destroy VM] **************************************************************
changed: [localhost]

TASK [Destroy Directory] *******************************************************
changed: [localhost]

PLAY RECAP *********************************************************************
localhost : ok=8 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

INFO Pruning extra files from scenario ephemeral directory
```

A quick look at the cockpit: everything has been wiped.

Going further

In the end, Molecule's delegated driver only requires producing a single file, instance_config.yml, containing the SSH connection information. This means you can use any kind of tool to provision the machines, as long as you retrieve the required information at the end. I read quite a few articles that pit Terraform against Ansible, but I like to say they are complementary.
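To illustrate this contract independently of Terraform, here is a minimal Python sketch that writes an instance_config.yml from whatever host list a provisioning tool returns. It relies on YAML parsers accepting JSON syntax (YAML 1.2 was designed as a superset of JSON), so no YAML library is needed. The function name and the input format are assumptions, and the header comment mirrors the one in the generated create.yml.

```python
"""Write a Molecule instance_config.yml from any provisioner's host list (no YAML library needed)."""
import json
import tempfile
from pathlib import Path

REQUIRED_KEYS = ("instance", "address", "user", "port", "identity_file")


def write_instance_config(hosts: list[dict], path: Path) -> None:
    """Validate the hosts, then serialize them as JSON, which YAML parsers also accept."""
    for host in hosts:
        missing = [key for key in REQUIRED_KEYS if key not in host]
        if missing:
            raise ValueError(f"{host.get('instance', '?')}: missing {missing}")
    path.write_text("# Molecule managed\n\n" + json.dumps(hosts, indent=2) + "\n")


if __name__ == "__main__":
    # Hypothetical output of some other provisioning tool.
    hosts = [{"instance": "Ubuntu", "address": "203.0.113.10", "user": "outscale",
              "port": 22, "identity_file": "/tmp/id_ed25519"}]
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "instance_config.yml"
        write_instance_config(hosts, target)
        print(target.read_text())
```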

You can adapt the code to your needs by switching to another Terraform provider: GCP, EC2, …

I will publish the code in the coming days, and you will be able to help me extend it, for example with other templates.